Business Strategy

ChatGPT is taught to flirt, solve math equations

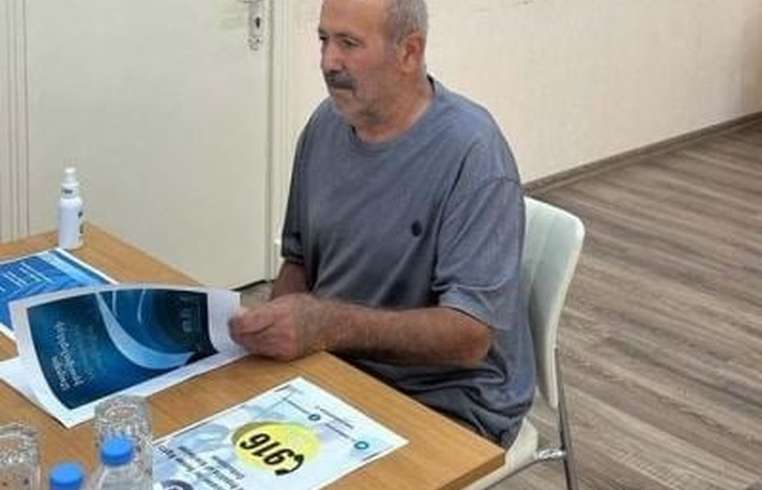

OpenAI has unveiled the latest version of the technology that underpins its AI chatbot ChatGPT, the BBC reports (https://www.bbc.com/news/articles/cv2xx1xe2evo). It is called GPT-4o, and it will be rolled out to all users of ChatGPT, including non-subscribers. It is faster than earlier models and has been programmed to sound chatty and sometimes even flirtatious in its responses to prompts. The new version can read and discuss images, translate languages, and identify emotions from visual expressions. It also has memory, so it can recall previous prompts. It can be interrupted and has an easier conversational rhythm, with no delay between asking it a question and receiving an answer. During a live demo using the voice version of GPT-4o, it offered helpful suggestions for how to go about solving a simple equation written on a piece of paper, rather than simply solving it. It analyzed some computer code, translated between Italian and English, and interpreted the emotions in a selfie of a smiling man. Using a warm American female voice, it greeted its prompters by asking them how they were doing. When paid a compliment, it responded: “Stop it, you’re making me blush!” But it wasn’t perfect. At one point it mistook the smiling man for a wooden surface, and it started to solve an equation that it hadn’t yet been shown.